Building Cloud Services: Sessions, Scaling and IaaS VS PaaS

In this post, we'll go through some basic design considerations for building a cloud service, such as sessions, scaling, load balancing, and the types of services cloud providers offer.

Sessions

Imagine that we have a mobile banking application. Whenever the app makes a request to our server, the servlet has to make sure that the user has already logged in before processing it, but we also don't want to make the user log in on every request. On top of that, the user could be using the app while travelling, enter a tunnel, and lose their connection to the server. When they emerge on the other side and send their next request, it will arrive as a brand new HTTP request, but we still trust the client it came from and want it to continue where it left off.

In HTTP, this is done through the use of Sessions, where the server or servlet keeps track of the state of the conversation between itself and a particular user across multiple HTTP requests. Some considerations when creating sessions:

- How long do we maintain a session? Sessions that stay active for too long take up resources that will not be available to other clients. We might also want clients to log in again regularly for security reasons.

- What information about the sessions should we store?
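
To make the first question concrete, here is a minimal sketch of an idle-timeout check in Java. `SessionRecord` is a hypothetical class that only remembers when its session was last used; the 30-minute timeout used in the usage note is an illustrative value, not a recommendation. In a real application, the session library would normally handle this for you.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical per-session record: it only tracks when the session was last used.
class SessionRecord {
    private Instant lastAccess;

    SessionRecord(Instant createdAt) {
        this.lastAccess = createdAt;
    }

    // Called on every request that presents this session.
    void touch(Instant now) {
        this.lastAccess = now;
    }

    // A session expires once it has been idle for longer than the timeout,
    // freeing its resources and forcing the client to log in again.
    boolean isExpired(Instant now, Duration idleTimeout) {
        return Duration.between(lastAccess, now).compareTo(idleTimeout) > 0;
    }
}
```

With `Duration.ofMinutes(30)` as the timeout, a session last touched 25 minutes ago is still valid, while one idle for 31 minutes is expired. Shorter timeouts free resources sooner and force more frequent logins.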

We typically also want to use some sort of authentication framework to strongly authenticate mobile clients, so that once a session has begun, a third party cannot intercept our session data and use it to exploit the server or the client's data.

Many battle-hardened and continuously audited session tracking libraries exist in the wild, and we should rarely try to reinvent the wheel. These libraries typically track sessions by sending the client some form of cookie containing session information, which the client sends back with each request so the server can recognize the session. The amount of information sent to the client varies depending on the sensitivity of your session data. For highly sensitive data, we can simply send a unique session identifier to the client while storing everything else on the server. In most cases, we can take a hybrid approach, storing some things on the client and only the highly sensitive data on the server side.
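
The "identifier only" approach can be sketched roughly as follows, purely to illustrate the idea rather than to replace an audited library. `ServerSideSessions` and the cookie name `SESSIONID` are hypothetical; the sketch assumes the identifier is a long random value from `SecureRandom`, so it cannot practically be guessed, while everything it points to stays in server memory.

```java
import java.security.SecureRandom;
import java.util.Base64;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical server-side session store: the client only ever sees an opaque
// random identifier, while the session data itself stays on the server.
class ServerSideSessions {
    private final SecureRandom random = new SecureRandom();
    private final Map<String, String> userBySessionId = new ConcurrentHashMap<>();

    // Creates a session for a logged-in user and returns its identifier.
    String create(String userId) {
        byte[] bytes = new byte[32]; // 256 random bits
        random.nextBytes(bytes);
        String id = Base64.getUrlEncoder().withoutPadding().encodeToString(bytes);
        userBySessionId.put(id, userId);
        return id;
    }

    // Looks up the user behind an identifier the client sent back.
    Optional<String> userFor(String sessionId) {
        return Optional.ofNullable(userBySessionId.get(sessionId));
    }

    // Set-Cookie value carrying only the identifier. HttpOnly hides it from
    // page scripts; Secure limits it to HTTPS connections.
    static String setCookieHeader(String sessionId) {
        return "SESSIONID=" + sessionId + "; HttpOnly; Secure; Path=/";
    }
}
```

A hybrid design would keep this structure but also place some non-sensitive values in the cookie itself.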

Scaling

Let's say that you build the next Instagram and your cloud application sees exponential growth in active users, going from thousands to millions of users daily. Is your application going to be able to handle all that traffic? Will your cloud services be able to scale up to support it?

Horizontal Scaling VS Vertical Scaling

We could start off with a cheap, lower-performance server and, as activity on our app increases, upgrade that single server to a more powerful one. This approach of repeatedly moving to a higher-performance server is called vertical scaling. However, at some point we will reach a traffic load that no single machine is powerful enough to handle. For instance, there is no way Google could support all of its search operations on a single computer. Instead, we can add more machines as our traffic increases and spread the load evenly across them, continuing to add as many machines as are needed. This approach is called horizontal scaling, and it is generally the preferred scaling method for cloud services.
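
With horizontal scaling, sizing the fleet becomes simple arithmetic. The sketch below assumes identical servers and a per-server throughput you would measure yourself (for example by load testing); `CapacityPlanner` and its parameters are hypothetical, and `targetUtilization` leaves headroom so no server runs flat out.

```java
// Hypothetical capacity estimate for horizontal scaling: how many identical
// servers are needed to serve a given peak load while keeping some headroom?
class CapacityPlanner {
    static int serversNeeded(double peakRequestsPerSecond,
                             double requestsPerSecondPerServer,
                             double targetUtilization) {
        if (requestsPerSecondPerServer <= 0 || targetUtilization <= 0 || targetUtilization > 1) {
            throw new IllegalArgumentException("invalid capacity parameters");
        }
        // Each server is only loaded up to targetUtilization of its capacity.
        double usablePerServer = requestsPerSecondPerServer * targetUtilization;
        // Round up: a fraction of a server still means one more machine.
        return (int) Math.ceil(peakRequestsPerSecond / usablePerServer);
    }
}
```

For instance, with a hypothetical peak of 50,000 requests per second, 800 requests per second per server, and a 75% utilization target, `serversNeeded(50000, 800, 0.75)` returns 84. Growing traffic means raising the count, not finding a bigger machine.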

However, horizontal scaling is not necessarily easy. Inefficiencies in our code, such as poor architectural design choices, can often prevent us from taking full advantage of a new machine coming online. Thus, applications need to be designed with horizontal scaling in mind. One such consideration is to make our applications as stateless as possible.

Statefulness VS Statelessness

When a server has to keep details about its conversation with a client, such as caches and login information, in memory, the application is Stateful. When horizontally scaling a stateful application, we need to consider whether we want to migrate some of our existing clients to the new machine. If there is important state information that needs to be migrated as well, how do we move it, and how do we efficiently bootstrap the new machine's state to match the others?

In contrast, when a server doesn't have to remember anything and a request can be sent to any server running the application, the application is Stateless. We can essentially just turn on a new machine and immediately increase the capacity of our application. Thus, statelessness is a property we aim for when building highly scalable applications.

Load Balancing Stateless and Stateful Applications

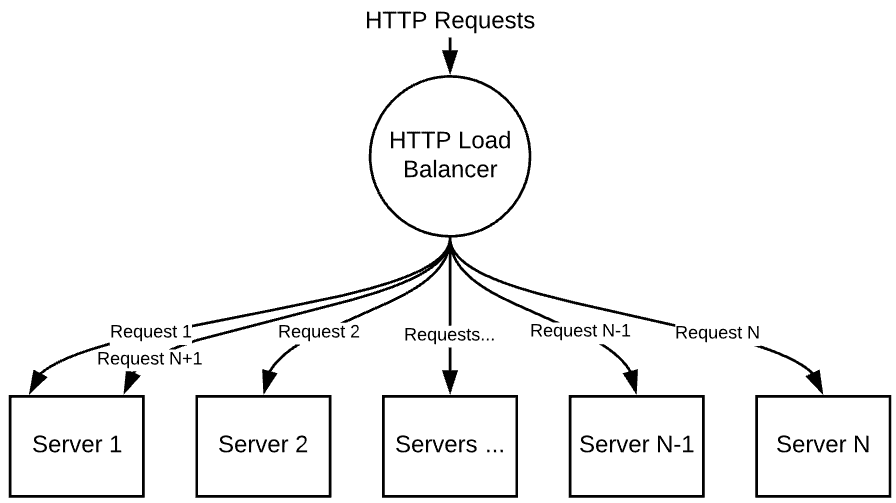

Cloud applications typically run on multiple servers, and a big concern is deciding which server should handle each request. Similar to how web containers use web.xml to route requests to their internal servlets, there are HTTP load balancers that look at the load on each server and decide how to allocate requests among them.

The simplest load balancing method is a round robin scheme, where requests are forwarded to each server in turn until every server has received one, and then the process starts over.

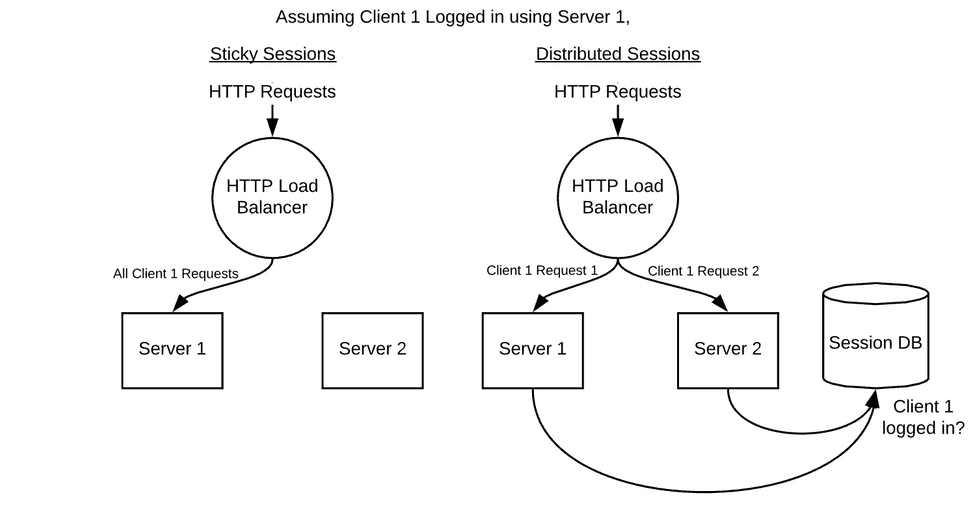
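
A round robin selector only needs a shared counter. This is a minimal sketch, assuming servers are identified by hypothetical names such as `server-1`; `RoundRobinBalancer` is not a real library class. An `AtomicLong` keeps the counter correct when many requests arrive concurrently.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;

// Minimal round robin selector: hands out servers in turn, then starts over.
class RoundRobinBalancer {
    private final List<String> servers;
    private final AtomicLong counter = new AtomicLong(); // shared across request threads

    RoundRobinBalancer(List<String> servers) {
        if (servers.isEmpty()) throw new IllegalArgumentException("no servers");
        this.servers = List.copyOf(servers);
    }

    // Returns the server that should receive the next request.
    String next() {
        long n = counter.getAndIncrement();
        return servers.get((int) Math.floorMod(n, (long) servers.size()));
    }
}
```

With three servers, successive calls to `next()` return `server-1`, `server-2`, `server-3`, `server-1`, and so on. Note that the selector never looks at who sent the request.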

However, this method requires the servlets running on each server to be stateless, so that it does not matter which client's request they are handling at any point in time. This can cause problems even in simple scenarios, such as when users log in. If a user logs in to Server 1 but their next request gets routed to Server 2, how is Server 2 supposed to know that the user has already logged in somewhere else? Thus, there needs to be a way to either share state between all the servers or route requests more intelligently. This is called Session Management.

One way to fix this problem is to use what are called Sticky Sessions. The load balancer remembers the server each client was first routed to, and then always routes that client to the same server for the duration of its session. This can be implemented effectively as a round robin of user sessions rather than of individual requests.
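
The "round robin of sessions" idea can be sketched like this, assuming the load balancer can read a session identifier from each request (for example, from a cookie). `StickySessionBalancer` and its methods are hypothetical names. The first request of a session picks the next server in turn, and the choice is remembered.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Sticky sessions as a round robin over sessions rather than requests.
class StickySessionBalancer {
    private final List<String> servers;
    private final AtomicLong counter = new AtomicLong();
    private final Map<String, String> serverBySession = new ConcurrentHashMap<>();

    StickySessionBalancer(List<String> servers) {
        if (servers.isEmpty()) throw new IllegalArgumentException("no servers");
        this.servers = List.copyOf(servers);
    }

    // New sessions take the next server in turn; known sessions keep theirs.
    String route(String sessionId) {
        return serverBySession.computeIfAbsent(sessionId, id -> {
            long n = counter.getAndIncrement();
            return servers.get((int) Math.floorMod(n, (long) servers.size()));
        });
    }

    // Forget the mapping when the session ends so the table doesn't grow forever.
    void endSession(String sessionId) {
        serverBySession.remove(sessionId);
    }
}
```

One trade-off of this design: the state still lives on individual servers, so if a server goes down, the sessions pinned to it go down with it.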

Another way of doing it is through Distributed Sessions. Here, each server is effectively stateless, and the information for each session is kept in a central database. Whenever a request arrives at the load balancer, it can forward the request to any server, which then checks the central database to see whether the user has already logged in.
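
A rough sketch of distributed sessions, reusing the Server 1 / Server 2 login scenario. `SessionDatabase`, `InMemorySessionDatabase`, and `AppServer` are hypothetical names. In a real deployment, the shared store would be a networked database reachable by every server. Here a map stands in for it so the example is self-contained.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Stand-in for the central session database shared by every server.
interface SessionDatabase {
    void put(String sessionId, String userId);
    Optional<String> userFor(String sessionId);
}

class InMemorySessionDatabase implements SessionDatabase {
    private final Map<String, String> data = new ConcurrentHashMap<>();

    public void put(String sessionId, String userId) {
        data.put(sessionId, userId);
    }

    public Optional<String> userFor(String sessionId) {
        return Optional.ofNullable(data.get(sessionId));
    }
}

// A stateless application server: it keeps no session data of its own
// and consults the shared database on every request.
class AppServer {
    private final String name;
    private final SessionDatabase sessions;

    AppServer(String name, SessionDatabase sessions) {
        this.name = name;
        this.sessions = sessions;
    }

    void login(String sessionId, String userId) {
        sessions.put(sessionId, userId);
    }

    String handle(String sessionId) {
        return sessions.userFor(sessionId)
                .map(user -> name + " serving " + user)
                .orElse(name + ": please log in");
    }
}
```

If the user logs in through `server-1`, a later request routed to `server-2` is still recognized, because both servers read the same store. The cost is an extra lookup against the central database on each request.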

Auto-scaling

One of the benefits of horizontal scaling in the Cloud is that we can take advantage of its built-in elasticity to reduce costs and respond to fluctuations in traffic automatically. While using a cloud service provider means we don't have to worry about buying hardware and setting up infrastructure to meet demand, we still need to decide when and how to add capacity to our application. Even though we can add or remove capacity very quickly, we need some way to monitor our resource usage in order to optimize it.

If we know how each server is expected to behave, we can measure properties such as request response times or CPU and memory load. We can then build some sort of intelligence that uses these measurements to decide when to add or remove capacity, and interact with the cloud provider as needed. This is called auto-scaling.
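
One very simple form of this intelligence is a threshold rule on average CPU utilization. This is a sketch, assuming `ThresholdAutoScaler` is a hypothetical class and that the actual call to the cloud provider to add or remove machines happens elsewhere. It only computes how many servers we want. The bounds keep the fleet from shrinking to nothing or growing without limit.

```java
// Hypothetical threshold-based auto-scaling policy driven by average CPU load.
class ThresholdAutoScaler {
    private final double scaleUpAbove;   // e.g. 0.70 average CPU utilization
    private final double scaleDownBelow; // e.g. 0.30 average CPU utilization
    private final int minServers;
    private final int maxServers;

    ThresholdAutoScaler(double scaleUpAbove, double scaleDownBelow, int minServers, int maxServers) {
        if (scaleDownBelow >= scaleUpAbove || minServers < 1 || maxServers < minServers) {
            throw new IllegalArgumentException("invalid policy");
        }
        this.scaleUpAbove = scaleUpAbove;
        this.scaleDownBelow = scaleDownBelow;
        this.minServers = minServers;
        this.maxServers = maxServers;
    }

    // Desired server count given the current count and average CPU (0.0 to 1.0).
    int desiredServers(int current, double avgCpu) {
        int desired = current;
        if (avgCpu > scaleUpAbove) {
            desired = current + 1;       // overloaded: add a machine
        } else if (avgCpu < scaleDownBelow) {
            desired = current - 1;       // mostly idle: remove a machine to save cost
        }
        return Math.max(minServers, Math.min(maxServers, desired));
    }
}
```

With a policy of `(0.70, 0.30, 2, 10)`, four servers at 85% CPU would grow to five, and four servers at 10% would shrink to three. The gap between the two thresholds avoids flapping back and forth around a single value.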

There is still a limit to how quickly capacity can be allocated in response to demand, because of the time needed for servers to boot up, applications to initialize, and any other configuration to complete. Any intelligence that we build should therefore be able to forecast the load over the next few minutes or hours, so that servers can be turned on in time to meet demand.
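
One simple way to look ahead is to fit a straight line to recent load samples and extrapolate it past the time a new server needs to become ready. This linear trend is only a hypothetical stand-in for whatever forecasting method you choose. Real traffic often has daily cycles that a straight line won't capture. `LoadForecaster` is an illustrative name, and the sketch assumes one sample per minute.

```java
// Hypothetical least-squares trend forecast over evenly spaced load samples.
class LoadForecaster {
    // samples[i] is the load (e.g. requests/second) measured i minutes into the window.
    static double forecast(double[] samples, int minutesAhead) {
        int n = samples.length;
        if (n < 2) throw new IllegalArgumentException("need at least two samples");
        double meanX = (n - 1) / 2.0;
        double meanY = 0;
        for (double y : samples) meanY += y;
        meanY /= n;
        // Ordinary least-squares slope of load against time.
        double num = 0, den = 0;
        for (int i = 0; i < n; i++) {
            num += (i - meanX) * (samples[i] - meanY);
            den += (i - meanX) * (i - meanX);
        }
        double slope = num / den;
        // Fitted value at the latest sample, then extended minutesAhead further.
        double latestFitted = meanY + slope * ((n - 1) - meanX);
        return Math.max(0, latestFitted + slope * minutesAhead);
    }
}
```

If servers take about five minutes to become ready (an assumed figure), `forecast(samples, 5)` estimates the load at the moment a newly requested server would actually start serving. That estimate, rather than the current load, is what we would size capacity for. For samples `{100, 200, 300, 400}` it returns 900.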

Another benefit of auto-scaling is that if one of the servers fails, the auto-scaler can recognize the failure and automatically allocate a replacement. This is another reason statelessness is so important: it allows us to easily add machines when another fails and to think about our capacity in aggregate.
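
Replacing failed servers can be sketched as a reconciliation step that brings the fleet back to its desired size. This assumes some health check has already produced the set of failed servers. `FleetReconciler` is a hypothetical name, and `launchServer` is a hypothetical hook standing in for whatever call asks the cloud provider for a new machine.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.function.Supplier;

// Hypothetical reconciliation step: drop servers that failed their health
// checks and launch replacements until the desired count is reached again.
class FleetReconciler {
    static List<String> reconcile(List<String> servers, Set<String> failed,
                                  int desired, Supplier<String> launchServer) {
        List<String> healthy = new ArrayList<>();
        for (String s : servers) {
            if (!failed.contains(s)) {
                healthy.add(s);
            }
        }
        // Because the servers are stateless, any new machine is an equal
        // replacement; nothing has to be copied over from the failed one.
        while (healthy.size() < desired) {
            healthy.add(launchServer.get());
        }
        return healthy;
    }
}
```

Running this periodically keeps capacity steady at the aggregate level. For example, if `s2` fails in a fleet of `s1`, `s2`, `s3` with a desired size of three, the result is `s1`, `s3`, plus one newly launched server.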

IaaS VS PaaS

When building an application for deployment into the cloud, one should also consider which style of service to use, as it has a big impact on the operational side of the application. These services typically take the form of Infrastructure as a Service (IaaS) or Platform as a Service (PaaS), though there are some hybrid services as well.

IaaS is the closest thing to buying a new machine in the cloud. Here, a cloud provider gives you a virtual machine, typically with a configuration you are familiar with, and you stack on top of it all the different components (such as controllers and databases) and utilities that your application needs. This gives you a great deal of control over all the pieces that go into your virtual machine, such as what the controllers can access on the underlying machine, and even lets you mix and match programs written in different languages, built just for the Linux virtual machine you're running on.

All of this flexibility also means that you are responsible for defining your own auto-scaling policies and for building your own infrastructure to set up, monitor, and manage these virtual machines.

PaaS, on the other hand, takes away much of the complexity of IaaS by automatically configuring the web container, the underlying virtual machine, security settings, auto-scaling, capacity needs, etc. In exchange, the provider supplies the tools you need to support the actual execution of your application, and these tools are tightly coupled to the platform. Examples include tools for collecting request logs or for monitoring how your application interacts with the database across all of your machines.

The downside, then, is that the application has to be written to live within the confines of what the service provider allows, sacrificing overall configurability.